Amazon has reportedly reached a five-year cooperation agreement with Databricks that aims to lower the cost for enterprises of building their own artificial intelligence (AI) capabilities by using Amazon's Trainium AI chips. Databricks will use Trainium to enhance its AI services, and it also plans to increase its use of Nvidia GPUs through Amazon Web Services (AWS), which underscores Nvidia's continued position as a leader in high-performance computing hardware for AI applications.

Databricks is a leading data and AI company that provides a cloud-based platform to help enterprises manage and analyze big data and run machine learning workloads. By partnering with Amazon, Databricks gains access to Amazon's custom chips designed specifically for AI, such as Trainium and Inferentia, which are part of the AWS strategy to provide an alternative to Nvidia's GPUs.

Amazon positions its Trainium AI chips as a more economical alternative to Nvidia GPUs, which dominate the AI chip market. Amazon claims that customers who use its chips can save up to 40 percent compared to competitors. The alliance reflects both growing demand for AI chips and intensifying competition among suppliers. Ali Ghodsi, CEO of Databricks, expects that AI chip prices may fall in the coming year as supply and demand rebalance, a change that could have a significant impact on the business strategies of tech companies.

Nvidia's dominance in the AI chip market is also being challenged on several fronts. Beyond the partnership between Amazon and Databricks, other companies such as AMD, Intel, and a number of start-ups are vying for a share of the market. Nvidia currently controls an estimated 70 to 95 percent of the AI chip market, and its flagship AI graphics processing units (GPUs) such as the H100, coupled with the company's CUDA software, give it a large head start over the competition. Even so, Nvidia CEO Jensen Huang has expressed concern about the company losing ground, acknowledging that many strong competitors are on the rise.

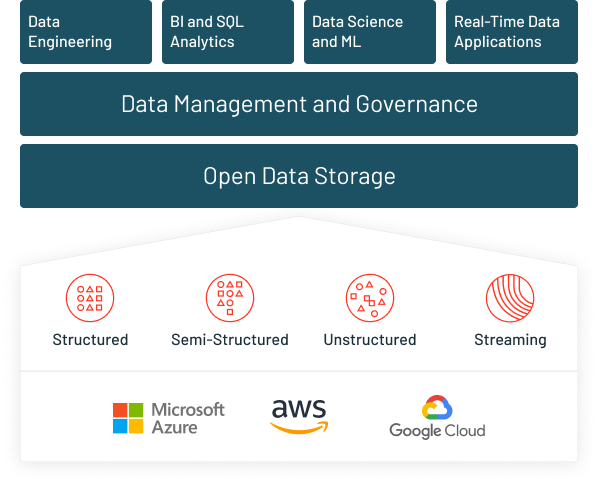

Figure: Amazon has reached an AI chip deal with Databricks in hopes of closing the gap with Nvidia

Key takeaways from Amazon's partnership with Databricks include:

1. AI chip development: Amazon's custom AI chips, such as Trainium (for training AI models) and Inferentia (for inference tasks), are designed to provide cost-effective and scalable solutions for AI workloads. They are intended to compete with Nvidia's GPUs, especially among customers using AWS cloud infrastructure.

2. Databricks integration: By leveraging Amazon's AI chips, Databricks can boost the performance of its machine learning and data analytics platform. The partnership is especially important for businesses looking for more efficient, lower-cost hardware so that they do not have to rely solely on Nvidia's GPUs.

3. Closing the AI computing gap: Nvidia's GPUs have become the industry standard for AI workloads, and Amazon's partnership with Databricks is an important step in its attempt to close the gap. By offering alternative silicon, Amazon both diversifies its AI portfolio and gives customers more options for AI training and inference hardware.

4. AI innovation in the cloud: The collaboration is expected to drive innovation in cloud-based AI solutions. It combines Databricks' expertise in big data management with Amazon's strengths in AI chip design and cloud services to meet the growing demand for AI computing with more efficient and scalable offerings.

Amazon's partnership with Databricks marks an intensification of competition in the AI chip market and signals Amazon's ambitions in AI technology. By offering lower-cost AI chips, the two companies could challenge the current market leader, Nvidia, and push the industry toward more efficient and cost-effective AI solutions.