Over the past decade, two technology areas have grown rapidly: artificial intelligence (AI) and the Internet of Things (IoT). AI systems excel at tasks such as data analysis, image recognition, and natural language processing, and have gained wide recognition in academia and industry. At the same time, advances in electronics have made it possible to miniaturize hardware, producing compact IoT devices that connect to the Internet. Experts foresee a future in which IoT devices are ubiquitous and form the basis of a highly connected ecosystem.

The convergence of AI and IoT is a fast-growing field that spans technological innovation, new applications, and emerging trends. As edge computing, 5G, and intelligent applications mature, AI and IoT are expected to have a deeper impact on smart living, smart cities, and smart industry. The combination of edge computing and 5G is widely seen as a major trend, as is the development of self-learning, adaptive systems.

However, integrating AI capabilities into IoT edge devices presents significant challenges. Artificial neural networks (ANNs), a core component of AI, usually require substantial computing power. IoT edge devices, in contrast, are designed to be small and resource-constrained, with limited power, processing capability, and circuit area. Developing ANNs that can learn effectively and operate within these limits is therefore a major challenge.

To address this problem, Professor Takayuki Kawahara and Yuya Fujiwara of Tokyo University of Science are working on innovative solutions. In their recent study, published in IEEE Access on October 8, 2024, they introduced a novel training algorithm for a special type of ANN, the binarized neural network (BNN), and demonstrated an implementation of this algorithm in an advanced computing-in-memory (CiM) architecture suited to IoT devices.

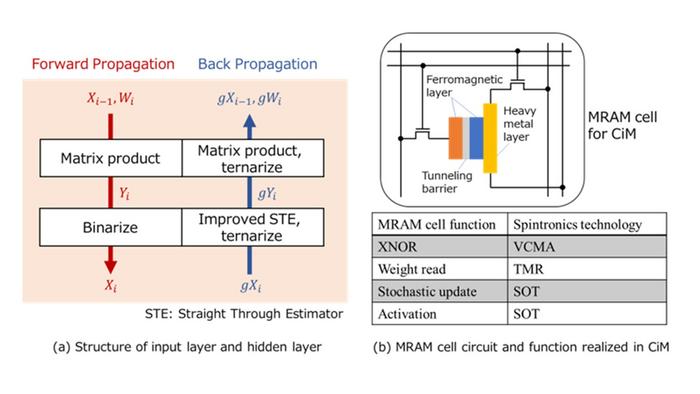

The neural network structure employs a three-value gradient in backpropagation (training), which reduces computational complexity. In addition, the research team developed a novel magnetic RAM unit using spintronics to implement their technology in the CiM architecture.

"BNNs use only -1 and +1 weights and activation values, which greatly reduces the computational resources required by the network and compresses the smallest unit of information to a single bit," Kawahara explains.”

Figure: Convergence of AI and IoT (Source: IEEE Access)

Conventional BNN training, however, still relies on real-valued gradients and weight updates, which is costly on resource-limited edge hardware. To address this challenge, the researchers developed a new training algorithm called ternarized gradient BNN (TGBNN), which contains three key innovations. First, it uses ternary (three-valued) gradients during training while keeping the weights and activation values binary. Second, it enhances the straight-through estimator (STE), improving control over gradient backpropagation to ensure efficient learning. Finally, it takes a probabilistic approach to updating parameters by exploiting the behavior of the MRAM cells.
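The three ingredients above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the article gives no thresholds, no details of the enhanced STE, and no update probabilities. The sketch therefore uses the standard clipped STE, and the names `ternarize`, `ste_grad`, `stochastic_update`, `threshold`, `clip`, and `flip_prob` are hypothetical, chosen only for illustration.

```python
import random


def ternarize(grad, threshold):
    """Map a real-valued gradient to -1, 0, or +1 (threshold is an assumed parameter)."""
    if grad > threshold:
        return 1
    if grad < -threshold:
        return -1
    return 0


def ste_grad(upstream, pre_activation, clip=1.0):
    """Standard clipped straight-through estimator for sign().

    The gradient passes through unchanged where |pre_activation| <= clip
    and is blocked elsewhere. The paper's enhanced STE is not reproduced here.
    """
    return upstream if abs(pre_activation) <= clip else 0.0


def stochastic_update(weights, ternary_grads, flip_prob, rng):
    """Probabilistically update binary weights from ternary gradients.

    A weight moves to the value opposite the gradient sign (gradient descent),
    but only with probability flip_prob. This stands in for the stochastic
    switching of a memory cell; the real probabilities are device-dependent.
    """
    updated = []
    for w, g in zip(weights, ternary_grads):
        target = -g  # descend: move against the gradient sign
        if g != 0 and target != w and rng.random() < flip_prob:
            updated.append(target)
        else:
            updated.append(w)
    return updated


rng = random.Random(0)
weights = [1, -1, 1]
real_grads = [0.8, 0.6, 0.01]
ternary = [ternarize(g, threshold=0.1) for g in real_grads]
new_weights = stochastic_update(weights, ternary, flip_prob=1.0, rng=rng)
```

With `flip_prob=1.0` every eligible weight flips, so the update is deterministic. Lower values make the update stochastic, which is the behavior the probabilistic scheme relies on.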

Subsequently, the research team implemented the TGBNN algorithm in a CiM architecture, a design approach that saves space and power by performing calculations directly in memory rather than in a separate processor. They developed a new XNOR logic gate as the building block of a magnetic random access memory (MRAM) array, which uses magnetic tunnel junctions to store information.
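Why an XNOR gate is a natural building block for a BNN can be seen in software. When -1 and +1 are encoded as the bits 0 and 1, multiplying two values is the same as XNOR-ing their bits, and a dot product becomes a count of matching bits. The sketch below shows this standard XNOR-popcount identity in plain Python. It does not model the MRAM circuit itself, and the helper names `pack_bits` and `xnor_popcount_dot` are hypothetical.

```python
def pack_bits(values):
    """Pack a list of -1/+1 values into an int (+1 -> bit 1, -1 -> bit 0)."""
    word = 0
    for i, v in enumerate(values):
        if v == 1:
            word |= 1 << i
    return word


def xnor_popcount_dot(a_bits, b_bits, n):
    """Dot product of two length-n vectors of -1/+1 values stored as packed bits.

    XNOR marks positions where the two vectors agree. Each agreement
    contributes +1 and each disagreement -1, so dot = 2 * matches - n.
    """
    mask = (1 << n) - 1
    agree = ~(a_bits ^ b_bits) & mask
    matches = bin(agree).count("1")
    return 2 * matches - n


a = [1, -1, 1, 1]
b = [1, 1, -1, 1]
reference = sum(x * y for x, y in zip(a, b))
fast = xnor_popcount_dot(pack_bits(a), pack_bits(b), len(a))
```

Here `reference` and `fast` are equal, so the multiply-accumulate step of a binary layer reduces to bitwise logic plus a counter.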

To change the value stored in a single MRAM cell, the researchers employed two mechanisms: spin-orbit torque, the force that arises when an electron spin current is injected into a material; and voltage-controlled magnetic anisotropy, which refers to manipulating the energy barrier between different magnetic states in a material. With these methods, the size of the product-of-sum (multiply-accumulate) calculation circuit was reduced to half that of a conventional cell.

The research team tested the performance of the MRAM-based CiM system using the MNIST handwriting dataset, which contains images of handwritten digits that the ANN has to recognize. "The results show that our ternarized gradient BNN achieves an accuracy of more than 88% using error-correcting output code (ECOC)-based learning, while matching the accuracy of a conventional BNN of the same structure and converging faster during training," Kawahara noted. "We believe that our design will enable BNNs on edge devices to operate efficiently, maintaining their ability to learn and adapt."
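For readers unfamiliar with ECOC, the idea is that each class is assigned a binary codeword and the network predicts the bits of that codeword rather than a one-hot label. The predicted class is the one whose codeword is closest to the output in Hamming distance, so a few wrong bits can be tolerated. The sketch below illustrates only this decoding step. The three-class `codebook` is a toy assumption, not the code used in the study, and the function names are hypothetical.

```python
def hamming(a, b):
    """Number of positions at which two equal-length sequences differ."""
    return sum(1 for x, y in zip(a, b) if x != y)


def ecoc_decode(output_bits, codebook):
    """Return the class label whose codeword is nearest to output_bits."""
    return min(codebook, key=lambda label: hamming(output_bits, codebook[label]))


# Toy codebook (assumed): any two codewords differ in 4 positions,
# so a single flipped output bit can still be decoded correctly.
codebook = {
    0: [1, 1, 1, -1, -1, -1],
    1: [-1, -1, 1, 1, 1, -1],
    2: [1, -1, -1, 1, -1, 1],
}

# Codeword for class 1 with the bit at index 4 flipped.
noisy_output = [-1, -1, 1, 1, -1, -1]
predicted = ecoc_decode(noisy_output, codebook)
```

Because the network's outputs are themselves -1/+1 values, this decoding fits a binary network without requiring a real-valued softmax layer.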

This breakthrough is expected to drive the development of more capable IoT devices that make more efficient use of AI, with important implications for several fast-growing areas. For example, wearable health monitoring devices could become smaller, more efficient, and more reliable, and could function without constant cloud connectivity. Similarly, smart homes could perform more complex tasks and respond more quickly. Across these and other use cases, the proposed design may also contribute to sustainability goals by reducing energy consumption.