AMD is widely seen as the only company that can challenge Nvidia's dominance in the AI GPU market. At the recent Advancing AI conference in San Francisco, CEO Lisa Su joined key customers and partners, including Microsoft, OpenAI, Meta, Oracle, and Google Cloud, to unveil a range of new AI and high-performance computing products. The announcements include fifth-generation EPYC server processors and the Instinct MI325X family of accelerators.

In her keynote speech at the conference, Lisa Su showcased a range of new products that improve computing power and energy efficiency, as well as innovative AI solutions for businesses and consumers. These new products include the EPYC 9005 series processors, the Instinct MI325X accelerator, the Pensando Salina DPU, and the Pensando Pollara 400 networking product.

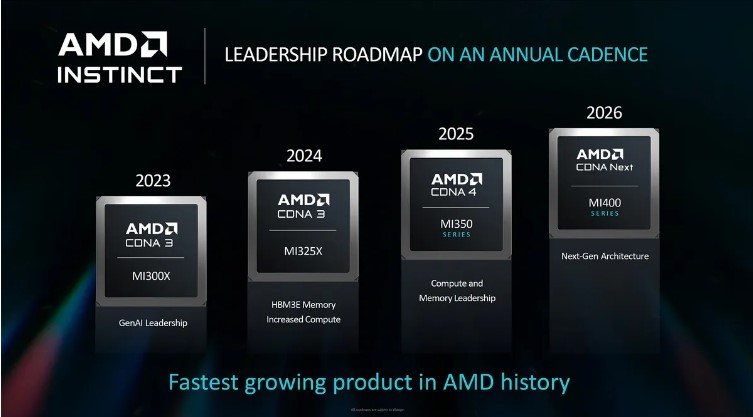

The most notable announcements are the upcoming MI325X, MI350 and MI400 accelerator series. They will be released in Q4 2024, mid-2025, and 2026, respectively. Among them, the MI350 will be powered by TSMC's 3nm process, while the MI325X will compete directly with Nvidia's much-talked-about H200 accelerator.

In her speech, Lisa Su said, "Data centers and artificial intelligence present tremendous growth opportunities for AMD. We're rolling out powerful EPYC processors and AMD Instinct accelerators for a growing number of customers. Looking ahead, we expect the data center AI accelerator market to grow to $500 billion by 2028. We are committed to driving open innovation at scale with scaled silicon, software, networking, and cluster-scale solutions."

MI325X vs Nvidia H200

Notably, AMD's data center segment achieved record revenue of $2.8 billion in the second quarter of 2024, up 115% year-over-year, primarily due to a significant increase in Instinct GPU shipments. The segment accounted for about half of the company's total revenue. AMD also raised its 2024 data center GPU revenue forecast to $4.5 billion from $4 billion.

Since its release in December 2023, the AMD Instinct MI300X accelerator has been widely adopted by leading cloud service providers, OEMs, and ODM partners, and powers popular AI models for millions of users every day, including OpenAI's ChatGPT, Meta's Llama, and millions of open-source models on the Hugging Face platform.

According to AMD, the MI325X is built on TSMC's 5nm process and is designed to deliver higher memory efficiency and AI compute for high-density AI workloads. Equipped with 256GB of HBM3E memory and 6.0TB/s of memory bandwidth, the MI325X has 1.8x the memory capacity and 1.3x the bandwidth of the Nvidia H200. Its theoretical peak FP16 and FP8 compute is also 1.3x that of the H200. With these improvements, AMD says the MI325X delivers 1.3x, 1.2x, and 1.4x faster inference than the H200 when running Mistral 7B at FP16, Llama 3.1 70B at FP8, and Mixtral 8x7B at FP16, respectively.
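The memory ratios quoted for the MI325X can be checked with a short calculation. This is a minimal sketch: the MI325X figures come from the article, while the H200 figures (141GB of HBM3E and 4.8TB/s of bandwidth) are assumptions taken from Nvidia's published specifications and do not appear in the article itself.

```python
# MI325X figures as quoted in the article.
mi325x = {"memory_gb": 256, "bandwidth_tbs": 6.0}

# ASSUMPTION: H200 figures are not given in the article; these are
# Nvidia's published numbers (141 GB HBM3E, 4.8 TB/s).
h200 = {"memory_gb": 141, "bandwidth_tbs": 4.8}

# Ratio of MI325X to H200 for each memory metric.
capacity_ratio = mi325x["memory_gb"] / h200["memory_gb"]
bandwidth_ratio = mi325x["bandwidth_tbs"] / h200["bandwidth_tbs"]

print(f"Memory capacity: {capacity_ratio:.2f}x")
print(f"Memory bandwidth: {bandwidth_ratio:.2f}x")
```

Under these assumptions, the capacity ratio comes out at roughly 1.8x, matching the quoted figure, and the bandwidth ratio at about 1.25x, which is consistent with the quoted 1.3x only after rounding up.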

Volume production and shipments of the MI325X are scheduled to begin in Q4 2024, and systems are expected to be widely available in Q1 2025 from platform vendors including Dell, Eviden, Gigabyte, HPE, Lenovo, and Supermicro.

Figure: AMD Instinct roadmap (Photo: AMD)

Advancements in the MI350 and MI400 series

The MI350 series is based on AMD's CDNA 4 architecture, will be manufactured on TSMC's 3nm process, and will debut in mid-2025. According to AMD, it delivers up to 35x faster inference performance than the CDNA 3-based MI300 series and extends the company's lead in memory capacity, with up to 288GB of HBM3E memory per accelerator.

Driven by software innovation and an open ecosystem

AMD continues to advance its ROCm open-source software stack, adding new capabilities to support training and inference for generative AI workloads. ROCm 6.2 now supports essential AI features such as FP8 data types, Flash Attention 3, and kernel fusion. According to AMD, ROCm 6.2 delivers a 2.4x improvement in inference performance and a 1.8x improvement in LLM training performance compared to ROCm 6.0.

The launch of AI networking solutions

AMD is also leveraging hyperscale computing platforms to deliver a comprehensive range of programmable DPU products for next-generation AI networks. An AI network consists of a front end, which delivers data and information to the AI cluster, and a back end, which manages data transfer between accelerators and clusters. Together they ensure that the CPUs and accelerators in the AI infrastructure can work together efficiently.

To that end, AMD has announced the Pensando Salina DPU for front-end network management and the Pensando Pollara 400 AI NIC for back-end network optimization. The Pensando Salina DPU is a third-generation programmable DPU that doubles the performance, bandwidth, and scale of the previous generation and supports 400G throughput, dramatically increasing data transfer speeds. Powered by the AMD P4 programmable engine, the Pensando Pollara 400 is the industry's first Ultra Ethernet Consortium (UEC)-enabled AI NIC and is designed to improve data communication between accelerator clusters.

Both products are expected to sample with customers in the fourth quarter of 2024 and to launch officially in the first half of 2025.

Future outlook

In the personal computing space, AMD's Ryzen AI PRO 300 series notebook processors have also been significantly improved. Manufactured on TSMC's 4nm process, the series is based on the Zen 5 and XDNA 2 architectures, which extend battery life and improve security and performance. The processors are already used in the first Microsoft Copilot+ notebooks designed for enterprise use.