Recently, Bill Schweber, a senior electronics engineer, published an article in EE Times titled "How Much Electricity Does Artificial Intelligence Computing Really Need?" In it, the author, who has worked in technology for many years, examines how much power artificial intelligence (AI) computing requires and prompts reflection and discussion on the energy needs of future AI development. Today, China Overseas Semiconductor Network reads this article with you.

Schweber opens the article by quoting the well-known line from baseball player Yogi Berra, "It is very difficult to predict the future", to make the point that the future power demand of artificial intelligence is not yet known. The question is widely discussed, but the actual answer is fraught with uncertainty. The author criticizes some current market research and media reports, including those attributed to Goldman Sachs, Bloomberg, and the International Energy Agency, for drawing alarmist conclusions about power shortages from incomplete data or inferences.

Schweber points out that these forecasts often rely on a "trend extension approach", which simply projects existing trends into the future and ignores real-world technological inflection points, market events, and unpredictable factors. Such predictions are also often used in the media to stoke anxiety and thereby attract more attention and funding. Even a data point cited in individual reports, that "ChatGPT consumes more electricity than Google searches", can easily mislead the public when presented without its overall context.

The author does not dismiss the possibility that AI will increase power consumption in the future, but he emphasizes that AI algorithms and the hardware chips they run on are steadily becoming more efficient. For example, Google's next-generation TPU processors are significantly more efficient, and the energy needed to train AI models has fallen by a factor of hundreds compared with 2016. Schweber believes that while AI power demand deserves attention, it is not growing at a rate or scale that is "about to swallow up the world".

A rational interpretation of AI power consumption

A key takeaway from the article is that although the power consumption of AI is a potential problem, its impact will not necessarily be as large and uncontrollable as some predictions claim. Schweber cites Koomey's law as an example: advances in computing efficiency may offset some of the increase in energy demand. In addition, AI currently accounts for only a small share of the world's total electricity consumption, and although the power consumption of data centers is rising, the portion attributable to AI is relatively small.

At the same time, the author points out that many unknown factors in the market can have a significant impact on electricity consumption, such as technological breakthroughs and changes in the socio-political environment. None of these factors can be accurately captured by current predictive models. Schweber therefore advises against relying too heavily on existing data points to extrapolate the future.

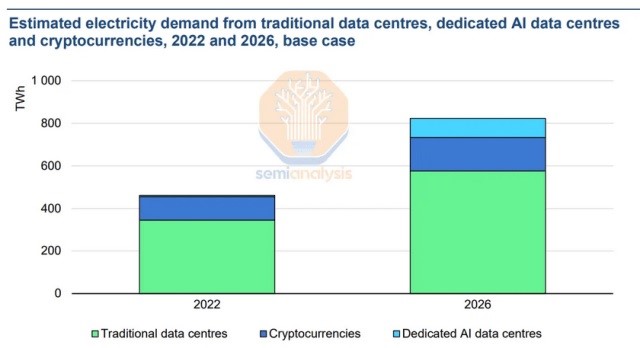

Figure: The electricity consumption of "digital hubs" can be broken down into three main parts: traditional data centers, cryptocurrency mining, and dedicated AI facilities. (Source: IEA via SemiAnalysis)

Reflection and personal insight

Reading Schweber's article, I found his argument insightful on a technical level, and it also speaks to the uncertainty of future technological development. That uncertainty shows up not only in AI's demand for electricity but also in one of the major challenges of technology forecasting: we can often only speculate about the future from current data and trends, and it is difficult to foresee the dramatic changes that technological breakthroughs may bring.

After citing a large amount of data and many predictions, the article places particular emphasis on the role of Koomey's law. I think this deserves special attention. As the efficiency of computing devices and AI chips continues to improve, future power demand for data centers and AI computing may not increase linearly, but may instead follow a more complex curve. In other words, even as AI applications expand rapidly, the increase in electricity consumption may be partly offset by more efficient technologies. This suggests that forecasts that lean too heavily on existing trends, especially those that ignore the potential of technological advances, are often incomplete.
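To make the offsetting effect concrete, here is a minimal Python sketch comparing a pure trend-extension forecast with one that also accounts for efficiency gains. All names and numbers in it are hypothetical: the 50% annual growth in compute demand and the 2.6-year efficiency doubling period are illustrative assumptions, not figures from Schweber's article, and the model is deliberately simplistic.

```python
def trend_extension(base_energy, demand_growth, years):
    """Naive forecast: energy grows in lockstep with compute demand."""
    return [base_energy * (1 + demand_growth) ** t for t in range(years + 1)]


def efficiency_adjusted(base_energy, demand_growth, doubling_years, years):
    """Forecast in which computations per joule double every
    `doubling_years` years, so energy = demand / efficiency."""
    forecast = []
    for t in range(years + 1):
        demand = (1 + demand_growth) ** t          # relative compute demand
        efficiency = 2 ** (t / doubling_years)     # relative computations per joule
        forecast.append(base_energy * demand / efficiency)
    return forecast


# Illustrative assumptions only (not data from the article):
BASE_ENERGY = 1.0       # energy use today, in arbitrary units
DEMAND_GROWTH = 0.5     # assumed 50% yearly growth in compute demand
DOUBLING_YEARS = 2.6    # assumed efficiency doubling period
YEARS = 5

naive = trend_extension(BASE_ENERGY, DEMAND_GROWTH, YEARS)
adjusted = efficiency_adjusted(BASE_ENERGY, DEMAND_GROWTH, DOUBLING_YEARS, YEARS)

for year, (n, a) in enumerate(zip(naive, adjusted)):
    print(f"year {year}: naive {n:.2f}x, efficiency-adjusted {a:.2f}x")
```

Under these assumed inputs the efficiency-adjusted curve still rises, but far more slowly than the naive one. The specific values matter less than the shape of the argument: the result is very sensitive to the efficiency assumption, which is exactly the term that straight-line forecasts leave out.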

Another point worth exploring in depth is how the technical, political, and social factors Schweber mentions will shape future AI power demand. The spread of AI technology is not only a technical issue; it also involves global energy policy, supply chain management, and actual market needs. For example, some countries may enact stricter energy policies in the future, pushing AI and data centers toward more energy-efficient technologies. Changes in the technology supply chain, such as the adoption of new semiconductor materials, could also significantly affect the energy efficiency of AI computing.

More importantly, AI technology will gradually spread beyond data centers into a wider range of industries, such as industrial manufacturing, healthcare, and autonomous driving. This means that the power consumption of AI will become more distributed, spread across the needs of many industries. When discussing the power consumption of AI, it is therefore important to consider electricity use across the whole ecosystem rather than focusing solely on data centers.

Finally, Schweber's reference to Herbert Stein's idea that "unsustainable things will eventually stop" further reinforces the case for caution about forecasts of future electricity demand. Indeed, many current predictions about AI power consumption rest on a "straight line" mindset that ignores inflection points in technology, policy, and markets. This kind of linear forecasting is easy to understand, but it cannot capture the complexity of the real world. As the article says, the short-term future often doesn't unfold as smart people expect.

From this point of view, Schweber's article reminds us that when assessing the impact of AI on electricity demand, we should not readily accept panic-filled predictions, but should pay more attention to the balancing effects of technological progress and policy changes. As practitioners or observers, we need to stay open to technological progress, be wary of exaggerated claims, and apply critical thinking when analyzing possible future trends. This applies not only to artificial intelligence, but also to the semiconductor industry as a whole and to other technology-driven industries.

In conclusion, Schweber's article provides a rational, pragmatic perspective and reminds us to be cautious in the face of uncertainty about future technological developments. This is a valuable perspective for anyone who cares about AI and its associated energy needs.

Summary

Bill Schweber's "How Much Electricity Does AI Computing Really Need?" uses clear logic and detailed data analysis to lay out the current controversy and confusion about AI power consumption. He reminds us not to be misled by exaggerated predictions, but to take a rational view of the future development of AI technology and its impact on electricity demand. For readers, the article is enlightening and practical, prompting us to look more rationally at the challenges and opportunities brought about by technological progress. Original version: How Much Power Will AI Computing Actually Require?