AlphaChip, developed by Google DeepMind, is a reinforcement learning method for designing computer chip layouts that has transformed the way chips are designed. It began as a research project in 2020. Its ability to speed up and optimize chip layout has since been used in the last three generations of Google's own Tensor Processing Unit (TPU), a custom chip designed to accelerate AI workloads.

Computer chips have driven significant advances in artificial intelligence. AlphaChip turns this around and uses artificial intelligence to accelerate and optimize chip design.

At the heart of AlphaChip is a novel "edge-based" graph neural network that learns the relationships between interconnected chip components. Because this network generalizes across different chips, AlphaChip keeps improving with every layout it designs. Each design starts from a blank grid. AlphaChip places the circuit components one at a time until all of them are placed, and only then receives a reward based on the quality of the final layout.
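The episode structure described above (empty grid, one component placed per step, a single reward for the finished layout) can be illustrated with a minimal Python sketch. This is not AlphaChip's code. The 4x4 grid, the four-component netlist, the two hand-written policies, and the reward (negative half-perimeter wirelength, HPWL) are all illustrative assumptions. In AlphaChip the policy is a learned neural network, and the published work describes a reward that combines a wirelength proxy with congestion and density terms.

```python
"""Toy sketch of a sequential placement episode with a terminal reward.

Illustrative assumptions only (not AlphaChip's code): a 4x4 grid, a
hypothetical four-component netlist, hand-written stand-in policies, and a
reward equal to negative half-perimeter wirelength (HPWL).
"""
from typing import Callable, Dict, List, Tuple

Cell = Tuple[int, int]
Policy = Callable[[str, Dict[str, Cell], List[Cell]], Cell]

GRID_SIZE = 4
# Hypothetical netlist: each net lists the components it connects.
COMPONENTS = ["A", "B", "C", "D"]
NETS = [["A", "B"], ["B", "C", "D"], ["A", "D"]]


def hpwl(placement: Dict[str, Cell], nets: List[List[str]]) -> int:
    """Sum over nets of the half-perimeter of each net's bounding box."""
    total = 0
    for net in nets:
        rows = [placement[c][0] for c in net]
        cols = [placement[c][1] for c in net]
        total += (max(rows) - min(rows)) + (max(cols) - min(cols))
    return total


def run_episode(policy: Policy) -> Tuple[Dict[str, Cell], float]:
    """Start from an empty grid, place one component per step, score at the end."""
    placement: Dict[str, Cell] = {}
    occupied = set()
    for comp in COMPONENTS:
        # Free cells in row-major order; the policy picks one of them.
        free = [(r, c) for r in range(GRID_SIZE) for c in range(GRID_SIZE)
                if (r, c) not in occupied]
        cell = policy(comp, placement, free)
        placement[comp] = cell
        occupied.add(cell)
        # Intermediate steps earn no reward; only the final layout is scored.
    return placement, -float(hpwl(placement, NETS))


def first_free_policy(comp: str, placement: Dict[str, Cell],
                      free: List[Cell]) -> Cell:
    """Hypothetical baseline: always take the first free cell."""
    return free[0]


def greedy_policy(comp: str, placement: Dict[str, Cell],
                  free: List[Cell]) -> Cell:
    """Hypothetical stand-in for a learned policy: pick the free cell with the
    smallest total Manhattan distance to already-placed net neighbours."""
    neighbours = {c for net in NETS if comp in net for c in net
                  if c != comp and c in placement}

    def cost(cell: Cell) -> int:
        return sum(abs(cell[0] - placement[n][0]) + abs(cell[1] - placement[n][1])
                   for n in neighbours)

    # min() returns the first cell with the lowest cost, so ties go row-major.
    return min(free, key=cost)


if __name__ == "__main__":
    for name, policy in [("first-free", first_free_policy),
                         ("greedy", greedy_policy)]:
        layout, reward = run_episode(policy)
        print(name, layout, reward)
```

Running the script prints a reward of -6.0 for the first-free baseline and -5.0 for the greedy policy. The point of the design is that the agent only learns how good its sequence of placements was once the whole layout exists, which is why a learned policy must generalize from complete episodes rather than from per-step feedback.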

Google's Axion processor, the company's first Arm-based general-purpose data center CPU, was also designed with the help of AlphaChip. Google is also working on a new version of AlphaChip, which is expected to cover the entire chip design cycle, from computer architecture to manufacturing.

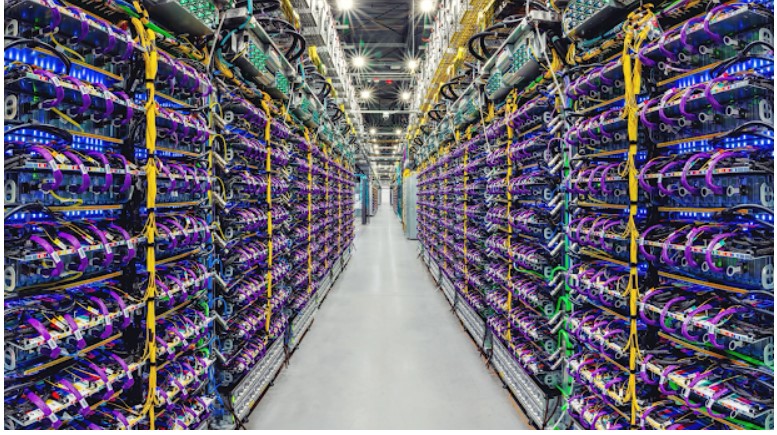

Figure: Google AI Accelerator Supercomputer (Source: Google)

Human engineers typically need weeks or months to produce a high-quality chip layout, while AlphaChip can do the same job in hours. Google claims that AlphaChip not only shortens the design cycle but also improves chip performance. Google has also released a pre-trained AlphaChip checkpoint, making the model weights public. This marks a significant step in the company's efforts to promote open collaboration in AI-assisted chip design.

Since 2020, AlphaChip has been used to design Google's own TPU AI accelerators, which power many of Google's large AI models and cloud services. These processors run the Transformer-based models behind Google's Gemini and Imagen. AlphaChip has improved the design of each TPU generation, including the latest sixth-generation Trillium chips, contributing to higher performance and faster development. Still, both Google and MediaTek, which has also adopted AlphaChip for some of its chips, use it to lay out only a limited set of blocks; most of the design work is still done by human engineers.

Google says the success of AlphaChip has sparked a new wave of research into applying AI to other stages of chip design, such as logic synthesis, macro selection, and timing optimization. AI-driven tools for these stages are already offered commercially by Synopsys and Cadence, albeit at significant cost. According to Google, researchers are also exploring how AlphaChip's approach could be applied to further stages of chip development.

Google envisions a future in which AI automates the entire chip design process, dramatically accelerating design cycles and unlocking new levels of performance through superhuman algorithms and end-to-end co-optimization of hardware, software, and machine learning models. The company says it looks forward to working with the community to close the loop between AI for chip design and chips designed for AI.