Tesla's Artificial Intelligence division has created a very special and very powerful new type of supercomputer, and they've called it Dojo. This is a project that has been quietly evolving behind the scenes at Tesla for years, and Dojo has already changed into something that could reinvent the supercomputer as we know it. The AI wars have begun, and Dojo might be the best weapon in Tesla's AI arsenal.

As artificial intelligence becomes a larger part of our daily lives, for better or worse, it has triggered a steep rise in demand for a very specific resource: compute. AI isn't like traditional software. It can't just be written into existence. You need a model, you need data, and then you need to bring those two elements together in some kind of techno-alchemy that I can't even begin to explain. We typically call this process training, but that doesn't really matter here, because all we're talking about is the machine that facilitates the training: the supercomputer.

Supercomputers have historically been used for very high-level scientific endeavors: sequencing the human genome, predicting the weather, understanding the function of diseases and viruses. So the need for a supercomputer was relatively limited. It was a niche product. That is no longer the case. Now every tech company in the world is competing in an arms race to build out its very own supercomputer cluster, and they are using these things expressly for artificial intelligence development.

The trick to wrapping your head around how a supercomputer works is to remember that we are not actually talking about one big computer. It's a collection of smaller computers that all work together as a single machine. The term often used here is parallel computing, and the best analogy is the F1 pit crew: when many people attack the problem from multiple angles all at once, the work gets done incredibly fast. So instead of one big processor trying to brute-force its way through a large number of calculations, the work is divided among many smaller processors running at the same time. The device of choice for this purpose is actually a graphics processing unit, or GPU, or what a lot of people would call a graphics card. These are great for playing video games and rendering digital video, but they also turned out to be very well adapted to running parallel calculations.

This is how Nvidia quickly became the hottest tech company in the world over the past year. They were already in a prime position as an industry leader in GPU technology. All they really had to do was optimize their existing design for supercomputer clusters and AI training applications. I'm not a stock analyst by any means, but if you want a stat that illustrates the AI computing explosion, just look at the five-year chart of Nvidia stock. Even if you had bought at the height of the pandemic bubble in 2021, you would still have tripled your money already. If you had done the same thing with Tesla stock, over half of your money would be gone right now. So that alone is reason enough for Tesla to be throwing its hat into the supercomputer ring. And in this case, being a little late to the party could prove to be a massive advantage for Tesla's own supercomputer design.

Flash back to five years ago, and the only people who really cared about Nvidia were hardcore PC gamers fiending for their next graphics upgrade. But as the AI industry began to emerge out of Silicon Valley, Nvidia was quickly finding a vast new market of very high-profile customers.

So all the company had to do was make an even bigger and more powerful version of the product it was already selling, with no expense spared. This gave us the A100 GPU in 2020, which quickly became the benchmark device for AI training compute. The A100 was so good that by the summer of 2021, Tesla had installed nearly 6,000 of them at the company's data center to help train the Full Self-Driving Beta AI model. At a starting price of $10,000 each, that would have cost Tesla at least $60 million, and possibly closer to $100 million, just for the GPU hardware alone. So it's no surprise that around this time, Tesla was already well into the development of a plan to design and build its own supercomputer. And unlike Nvidia, Tesla was not already invested in any existing computer chip architecture, so they were free to design any kind of chip and computer system they could imagine, one that was specifically optimized from the ground up for the next generation of high-performance computing.

Tesla has always embraced two very important strategies in its business development. One is called vertical integration, and the other is first principles thinking. Both apply to Project Dojo.

Vertical integration is essentially just bringing as much of your supply chain as possible under your own roof. Tesla vehicles, for example, rely heavily on parts made in-house. Tesla manufactures a lot of its own battery cells and battery modules. They make their own motors. This way, they're never at the mercy of a supplier. They don't pay a markup on their components, and Tesla always gets exactly what it wants from each component, because they built it specifically for their own purpose. It's a lot of upfront cost to establish a vertically integrated company, and it didn't happen overnight, but in the long run, it's vastly more sustainable.

First principles thinking is all about approaching a unique solution that's built from the ground up, which is not how most people solve problems.
The most common reasoning system people use is reasoning by analogy: you take something that's already similar to the outcome you want and then modify it. The first Tesla Roadster is a good example. Take a pre-existing sports car and modify it to become a new electric sports car, which is fine as a tech demonstration, but it wasn't a sustainable product. The Tesla Model S, on the other hand, was designed from the wheels up to be an electric car, and that same vehicle is still on sale to this day. So there might be something to this.

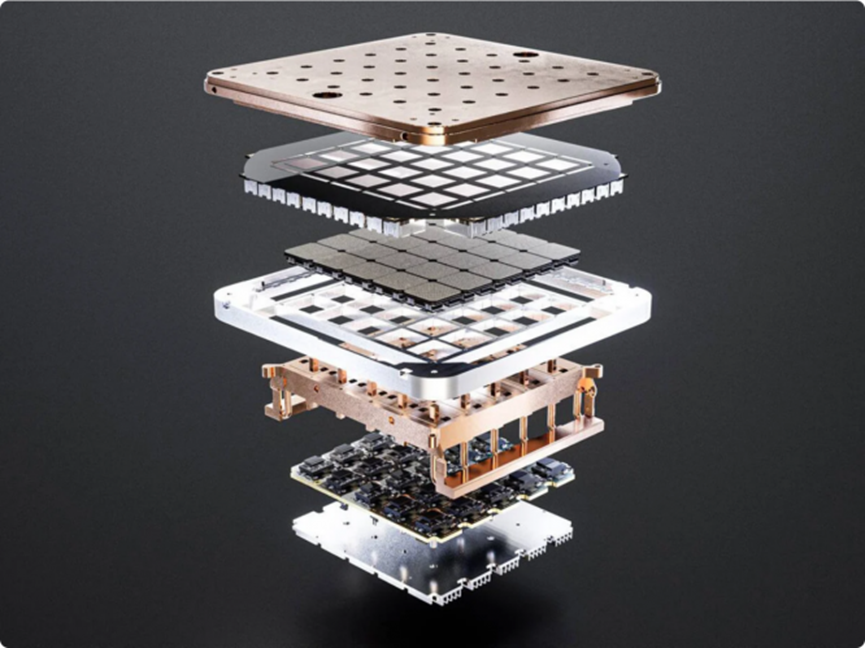

Figure: Tesla supercomputer Dojo

Dojo is a bespoke hardware platform that was designed from the ground up by Tesla's AI division exclusively for use with its computer vision, video-based Full Self-Driving networks. The goal is to create a digital duplicate of the human visual cortex and brain function, then use that to drive a car autonomously. This involves processing vast amounts of visual data, which in this case is video captured by the vehicle cameras. All of that information from billions of frames of digital video needs to be translated into a form that the AI model can understand. This is called labeling, and it's exactly what it sounds like. You are just assigning a designation to pixels so that the AI knows what it's looking at. The more labels the network has to draw from, the better it's going to get at recognizing patterns and making associations.

Dojo exists at its base level as something called a system on a chip, which is an entire computer assembled on one single piece of silicon. This is not exactly new. It's the same kind of system that runs your smartphone. This method allows for a spectacular level of efficiency. In a conventional computer, you have all these PCI ports and wires and motherboards and stuff connected together, and every time a signal moves through a connector, it slows down and loses energy. On a system on a chip, every necessary component lives on the same little square of semiconductor material, meaning that there are zero bottlenecks between the critical components of the computer system.

The Dojo chip is about the size of the palm of your hand, which is a lot smaller than an A100 GPU, but Dojo isn't supposed to exist as just a single chip. Dojo really becomes functional at the tile level, which is the point where multiple chips are fused together to function as one system. This is how we get that parallel computing aspect that makes this a true supercomputer.
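
To make the parallel computing idea a bit more concrete, here is a minimal Python sketch of the split-and-combine pattern: one large job is cut into chunks, each chunk goes to a separate worker, and the partial results are merged at the end. Everything in it is an illustrative assumption and has nothing to do with Tesla's actual software. The function names `split_into_chunks` and `parallel_sum_of_squares` are hypothetical, and the default of 25 workers is only a nod to the number of chips in a tile. Python threads stand in for chips here, so this shows the structure of the work, not a real speed-up.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(values, n_chunks):
    """Split a list into n_chunks contiguous pieces of near-equal size."""
    size, extra = divmod(len(values), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `extra` chunks take one additional element each.
        end = start + size + (1 if i < extra else 0)
        chunks.append(values[start:end])
        start = end
    return chunks


def sum_of_squares(chunk):
    """The work done by one worker: a small piece of the total job."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(values, n_workers=25):
    """Hand each worker one chunk, then combine the partial results.

    Threads only illustrate the pattern; in CPython they do not speed up
    pure-Python arithmetic like this.
    """
    chunks = split_into_chunks(values, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)


if __name__ == "__main__":
    data = list(range(1_000))
    print(parallel_sum_of_squares(data))
```

The answer is the same as doing the whole sum on one worker. The point of a supercomputer is that, on real hardware, the chunks are processed at the same time.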
With the Dojo tile, Tesla has integrated 25 Dojo chips to create one unified computer system that can distribute calculations across a decentralized network of individual processing chips, and each tile contains all of the necessary hardware for power, cooling, and data transfer. It's a self-sufficient computer in itself, made up of 25 smaller computers. Then, going one level up, they integrate six tiles together into one single rack unit, and then to make one cabinet, they integrate two of the racks into one case.

And this is just Dojo version 1. The latest on Dojo version 2 comes from Tesla's chip manufacturer, TSMC, the world's largest microchip fabricator. TSMC produced the D1 chip for Tesla that has been operating at the company's data centers over the past two years, and they've just teased a new design for the D2 chip that takes the Dojo concept a step further. So D1 was all about the system on a chip, which meant fitting all of your components onto one silicon square the size of a palm, and then linking 25 of those squares together into a tile. But we know that any time you connect separate chips together, you create a bottleneck that slows down information. So the D1 solution is good, but it's flawed. D2 is an evolution of that concept that puts the entire Dojo tile onto a single wafer of silicon.

Stick with me here. This is a silicon wafer. It's a thin slice of material about the size of a dinner plate. This is the base that all computer chips are built on. The usual thing to do is to assemble as many chips on a single wafer as possible and then cut each chip out like a cookie. That way you maximize the yield of each silicon wafer, and you have the ability to just throw away or mark down any of the chips that either failed or don't reach full function. Making chips is hard. Sometimes they don't work, and oftentimes they only partially work. Did you know that in some cases, if you are not buying a top-of-the-line CPU, you are probably getting a partially broken version of it that they didn't want to throw away? That's why most companies don't do system on a wafer.
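
The yield argument can be put into rough numbers. The sketch below is a simplified model under stated assumptions, not TSMC's or Tesla's actual figures. It assumes every die works independently with the same probability, `per_die_yield`, and the 90% value is hypothetical. It compares dicing a wafer into 25 separate chips against needing all 25 regions of one undivided wafer to work at once, and it ignores the redundancy and fault-tolerance tricks that real wafer-scale designs may use.

```python
def expected_good_chips(n_dies, per_die_yield):
    """Diced wafer: each die is tested alone, so failures are simply discarded."""
    return n_dies * per_die_yield


def all_dies_work_probability(n_dies, per_die_yield):
    """Undivided wafer with no redundancy: every die must work at once."""
    return per_die_yield ** n_dies


if __name__ == "__main__":
    n_dies = 25           # chips per Dojo tile, from the text
    per_die_yield = 0.90  # hypothetical value chosen for illustration

    good = expected_good_chips(n_dies, per_die_yield)
    whole = all_dies_work_probability(n_dies, per_die_yield)
    print(f"Diced: about {good:.1f} of {n_dies} chips usable on average")
    print(f"Undivided: {whole:.1%} chance that all {n_dies} work")
```

With these assumed numbers, dicing gives about 22.5 usable chips per 25 on average, while the all-or-nothing wafer comes out fully working only about 7% of the time. That gap is the risk a wafer-scale design has to engineer around.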

In fact, aside from Tesla, there is only one other chip designer doing wafer-scale processing. That's Cerebras, and they claim that their third-generation chip is now the fastest AI processor on Earth. The Cerebras chip has over 46,000 square millimeters of surface area and contains 4 trillion transistors.

So what you end up with is one big-ass chip that harnesses the power of 25 processors that can all communicate with zero bottlenecks in between to act like one super processor. That means incredibly high bandwidth, incredibly low latency, and better power efficiency than the D1 tile, or literally any other computer system in the world.

At the same time that they were showing off the new Dojo system on a wafer, TSMC used the opportunity to hint at even greater things to come. The chipmaker will be scaling up its technology over the next three years to offer even more advanced wafer-scale systems, saying that by 2027, they will be able to provide 40 times more computing power than today's systems.

So we might as well just declare this game over. Tesla wins, right? Well, not really. There's a pretty big difference between designing a chip, or even building a chip, and actually deploying said chip in a large-scale supercomputer and getting it working as intended. That part is really, really hard. This is why Tesla is still buying Nvidia chips to train their self-driving car AI. Tesla never stopped buying Nvidia hardware, and they have no plans to stop anytime soon. Tesla activated a new training cluster with 10,000 of the latest H100 GPUs, each valued at around 30 grand. It's been recently claimed that Tesla has up to 35,000 H100s in its possession as of May 2024 and has plans to spend billions more on Nvidia hardware this year alone.

So we have to look at Dojo as a long-term bet and a risky one. Even Elon Musk has admitted that Dojo is not a sure thing.
It has the potential to pay off to the tune of hundreds of billions of dollars, but it could just as easily become another great idea that never went anywhere.