The development of large models places new demands on every layer of AI infrastructure: computing power, data services, platform service models, hardware and software updates, and green, energy-saving operation. AI infrastructure must be upgraded and optimized accordingly to support the further development and application of AI technology. According to Winsoul Capital, AI hardware infrastructure, advanced packaging, and domestic substitution in high-end manufacturing have become hot spots for semiconductor investment.

The rapid development of large AI models and the increasing demand for computing power

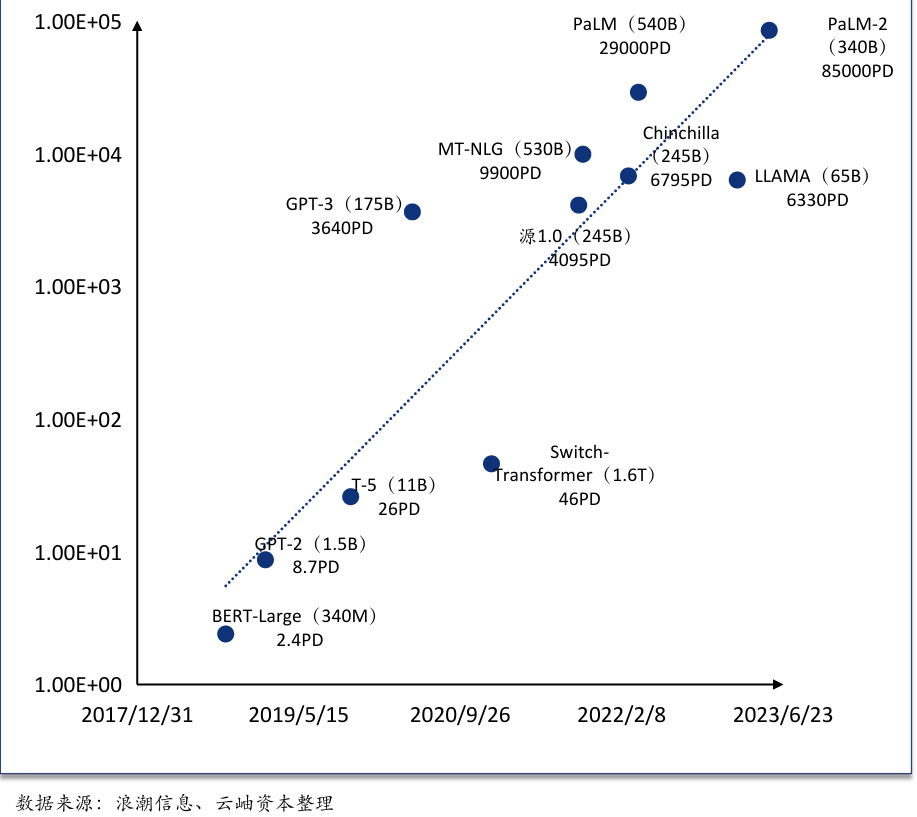

Large AI models demand more computing power. Compared with traditional machine learning models, they typically contain more parameters and more complex network structures, so both training and inference are more compute-intensive. According to Winsoul Capital, the parameter counts and data volumes used in pre-training have grown explosively as developers add multimodal support, extend context length, raise rate limits, and pursue other performance improvements. This growing demand for computing power directly drives the development of related technologies such as high-performance computing and cloud computing.

According to Winsoul Capital, the development of large models centers on improving computing power, and continuously rising data volumes raise the bar further. Processing and storing large-scale data efficiently are the two major challenges facing current computing facilities. As AI technology is applied more widely, more enterprises and institutions need to deploy large AI models, and demand for computing resources has risen sharply. The resulting shortage has pushed the computing industry to seek new solutions, such as more efficient computing architectures and optimized algorithms that improve computing efficiency and resource utilization.

Figure: The rapid development of large AI models and the increasing demand for computing power

The proportion of CPU value in AI scenarios has increased, and the penetration of ARM processors has accelerated

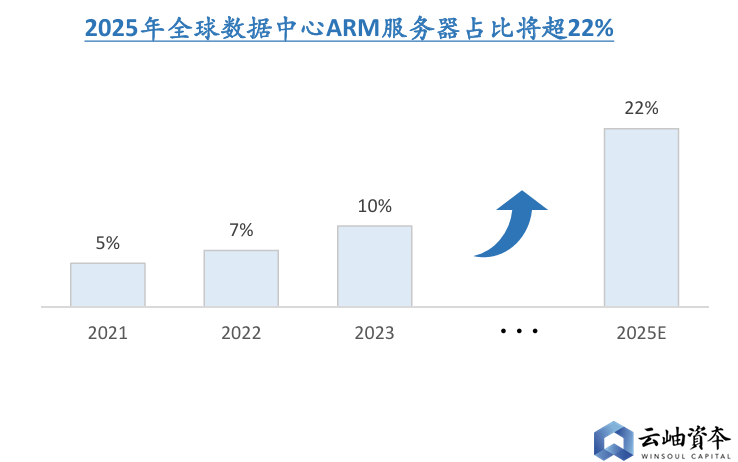

As demand for computing power continues to grow, the value of the CPU, the core of a computer system, in large model inference and multitasking has become increasingly prominent. Companies in the AI market are paying more attention to cost-effectiveness, that is, weighing the investment cost, practical results, and product performance of the software and hardware needed for large model training and inference. Thanks to their flexibility and versatility, CPUs offer strong cost performance in many AI application scenarios.

As the value of CPUs rises, major vendors are pairing self-developed AI chips with ARM server CPUs. In 2023, Amazon released the Trainium2 AI chip and the general-purpose Graviton4 ARM processor, and Nvidia released the GH200 Grace Hopper, which combines the ARM-architecture Grace CPU with the GH100 Hopper GPU. Microsoft launched its cloud AI chip, Microsoft Azure Maia 100, together with an ARM-based server CPU, Microsoft Azure Cobalt 100. Domestic technology giants such as Huawei have also built AI servers that combine ARM-based Kunpeng CPUs with Ascend chips based on the Da Vinci architecture. According to forecasts from research firms such as TrendForce, the penetration rate of the ARM architecture in the data center server market is expected to reach 22% by 2025 as cloud data centers gradually adopt more ARM-based servers. This suggests that ARM processors are accelerating their penetration of the data center market.

Figure: The proportion of ARM servers in global data centers is expected to reach 22% by 2025

RISC-V and AI are converging faster, with RISC-V expected to account for 30% of mainstream applications by 2030

The accelerating convergence of RISC-V and AI is a major technology trend that is expected to reshape computing architecture and chip design in the coming years. According to Winsoul Capital, the number of RISC-V Foundation members reached 40,037 in 2023, a year-on-year increase of 26%. According to Omdia's research, shipments of RISC-V-based processors are expected to grow by nearly 50% annually from 2024 to 2030, reaching 17 billion units by 2030. This growth reflects the strong momentum and broad application prospects of the RISC-V architecture.
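As a rough sanity check on the Omdia figures, the sketch below back-computes the 2024 shipment level implied by compound growth that ends at 17 billion units in 2030. It assumes exactly 50% growth per year over six compounding steps (2024 to 2030); the source says "nearly 50%", so the real 2024 base would be somewhat higher. The function names are illustrative only and do not come from the source.

```python
def implied_base(final_units, annual_growth, years):
    """Back out the starting value from a compound-growth end point."""
    return final_units / (1.0 + annual_growth) ** years


def project(base_units, annual_growth, years):
    """Return the value for each year, starting with the base year."""
    return [base_units * (1.0 + annual_growth) ** n for n in range(years + 1)]


FINAL_2030 = 17e9    # from the text: 17 billion shipments by 2030
GROWTH = 0.50        # assumption: exactly 50% per year ("nearly 50%" in the text)
STEPS = 2030 - 2024  # assumption: six compounding steps

base_2024 = implied_base(FINAL_2030, GROWTH, STEPS)
for year, units in zip(range(2024, 2031), project(base_2024, GROWTH, STEPS)):
    print(f"{year}: {units / 1e9:.2f} billion")
```

Under these assumptions the implied 2024 base is roughly 1.5 billion units, since 1.5 compounded six times is a factor of about 11.4.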

While the industrial segment will remain the largest application area for RISC-V technology, the strongest growth is expected in the automotive segment. The rise of AI will also contribute to the continued growth of RISC-V. By 2030, RISC-V is expected to account for 30% of applications across multiple fields, underscoring its importance and potential in the AI era.
