Winsoul Capital's analysis shows that as large AI models continue to evolve and upgrade, the demands on the versatility of AI chips keep rising. As hardware performance requirements increase, the market for dedicated chips is shrinking, and such chips are gradually being squeezed out. Application-specific integrated circuits (ASICs) built on a domain-specific architecture (DSA) must be re-adapted for each model and application scenario, so they are not general-purpose, which makes large-scale deployment difficult and not cost-effective. In addition, DSA designs cover many kinds of algorithms, which makes it hard for the application layer to build a unified ecosystem and creates a strong ecosystem barrier.

At the same time, GPUs have become the mainstream choice for AI computing chips. They can serve the computing needs of a wide variety of large models, apply to a broad range of use cases, have short development cycles, benefit from mass production that lowers cost, and offer strong parallel computing capability. They also leave room for software optimization and are backed by the general-purpose CUDA platform, which lets them meet diverse functional requirements.

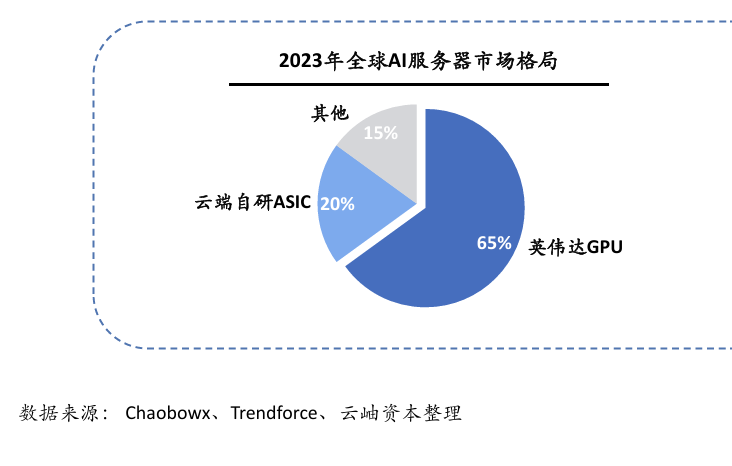

Winsoul Capital further pointed out that large models continue to evolve from convolutional neural networks (CNNs) to Transformer models, which places higher demands on the versatility of AI chips. Whether hardware can remain compatible with the iterative upgrades of various large models has become an important criterion for judging it. According to 2023 data, 65% of the world's AI servers use GPU architecture chips, indicating that the GPU architecture may become the dominant architecture in the future.

Figure: GPU has become the mainstream architecture of AI computing chips

NVIDIA first proposed the concept of the GPU in 1999 when it launched the GeForce 256 graphics processing chip. Since then, the GPU has developed from a single-purpose graphics display accelerator into a technology widely used in fields such as graphics rendering, data analysis, and scientific computing. NVIDIA holds more than 80% of the GPU market. It has maintained its position as industry leader because its hardware offers world-leading computing power and because, combined with the CUDA software platform, it gives customers model training and inference performance beyond what the hardware alone delivers.

Figure: Global AI server market landscape in 2023

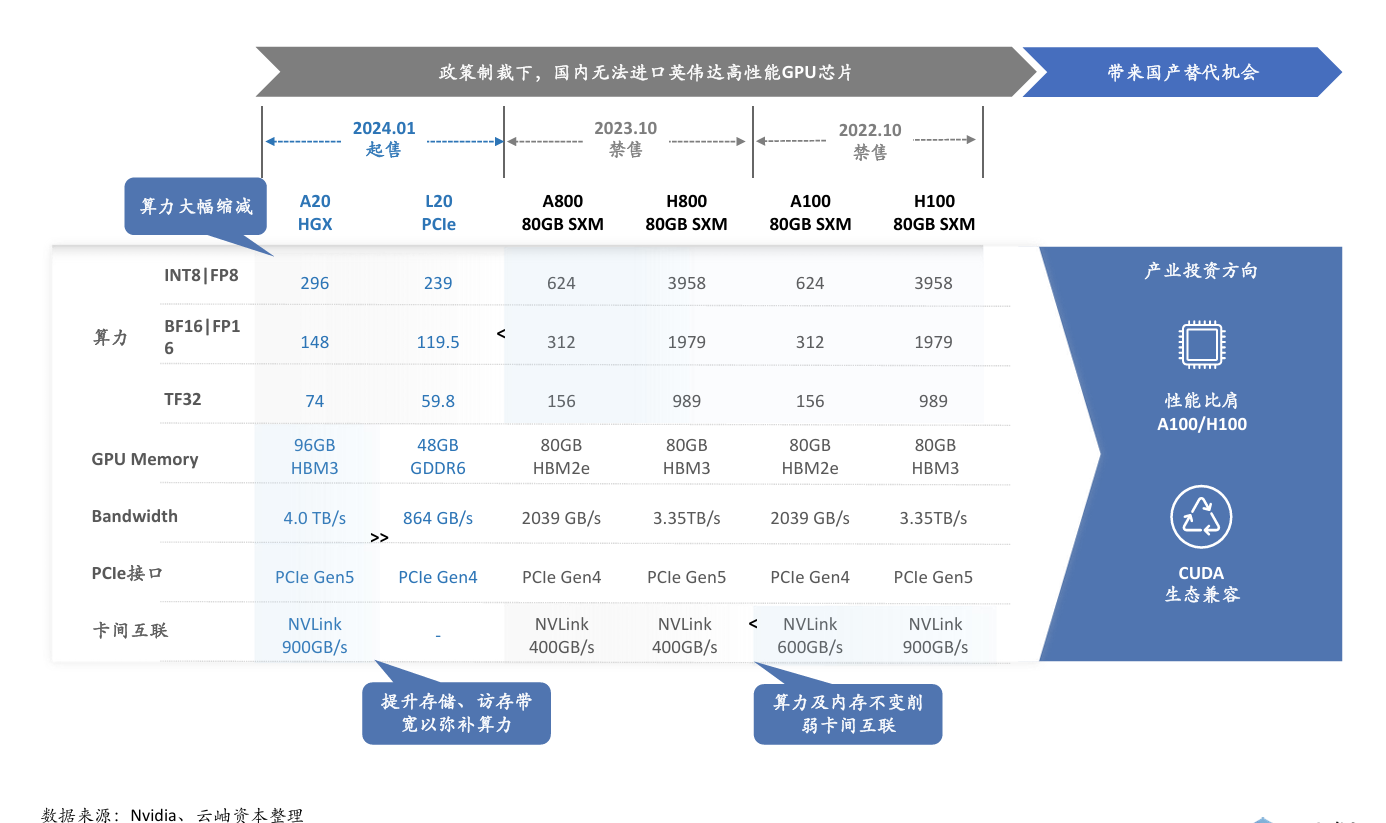

Under policy sanctions, NVIDIA's high-performance GPU chips urgently need domestic substitution

The United States has implemented new control measures on the export of AI chips, in particular restrictions on high-performance AI chip products such as NVIDIA's A100 and H100. Under these sanctions, China cannot import NVIDIA's high-performance GPU chips, which creates urgent demand for domestic high-performance GPUs and also gives domestic GPUs an opportunity to develop. Driven by policy support and market demand, the domestic GPU industry is developing rapidly. Domestic GPU manufacturers have launched relatively mature products for graphics rendering and high-performance computing, and they continue to close the performance gap with mainstream products in the industry. Most domestic GPU manufacturers offer compatibility with NVIDIA CUDA so that they can join the larger ecosystem and win customer adoption.

Figure: Domestic GPUs usher in substitution opportunities

The era of hybrid AI is coming, and AI computing at the edge and on the device is developing rapidly

In terms of commercial maturity, cloud-based model training and inference come first, but the subsequent demand for computing power at the edge is greater, which drives growth in demand for edge inference chips. As AI technology matures, the focus of AI processing is gradually shifting to the edge. The era of hybrid AI has arrived, and edge and on-device AI computing is developing rapidly, with significant progress in market size, technology, application scenarios, and market demand. Hybrid AI refers to terminal devices and cloud servers working together, allocating AI computing tasks between them according to the specific scenario and time, to provide a better user experience and make efficient use of computing resources. According to statistics, China's device-side AI market stood at 193.9 billion yuan in 2023 and is expected to reach 1,907.1 billion yuan in 2028, a compound annual growth rate (CAGR) of 58%. According to IoT Think Tank, AI-enabled modules accounted for 2% of cellular IoT modules in 2023, and this share is expected to rise to 9% by 2027, with shipments growing at a CAGR of 73%.
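As a quick sanity check on the market-size figures above, the growth rate can be recomputed from the two endpoints. The sketch below is illustrative only: the function name `cagr` and the variable names are assumptions introduced here, not part of the source analysis, and the calculation assumes five compounding years between 2023 and 2028.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.58 means 58%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market-size figures quoted in the text, in billions of yuan.
market_2023 = 193.9
market_2028 = 1907.1

# Assumption: growth compounds annually over 2028 - 2023 = 5 years.
rate = cagr(market_2023, market_2028, years=2028 - 2023)
print(f"Implied CAGR: {rate:.1%}")
```

Under these assumptions the implied rate comes out at roughly 58%, consistent with the quoted figure. The 73% shipment CAGR for AI-enabled cellular IoT modules cannot be checked the same way, because the text gives only the share of modules (2% and 9%), not the shipment volumes.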

Figure: With the advent of the hybrid AI era, edge AI computing is ushering in rapid development

The AI era requires energy-efficient and low-cost integrated storage and computing technologies

In the AI era, demand for computing power has soared, and the "storage wall" (often called the memory wall) urgently needs to be solved. Integrated storage and computing breaks through the limits of the storage wall, greatly improving computing efficiency and reducing power consumption. With the development of AIoT, demand for edge AI in intelligent IoT devices is becoming increasingly urgent, and in low-compute scenarios integrated storage and computing chips will see strong demand. As the technology matures, integrated storage and computing is also advancing quickly in high-compute scenarios. In typical near-memory designs, such as those using HBM, the memory and the main chip are connected through the same interposer, which effectively improves bandwidth and reduces energy consumption, and this approach is being used more and more widely.
