With the rapid development of AI technology and the rise of generative AI, demand for computing infrastructure keeps rising, and memory chips are becoming increasingly important as a key component. More capable large models require more computing power, and memory chips, as part of that infrastructure, must deliver both the high throughput and the large capacity that AI applications need. Winsoul Capital points out that the industry's recovery, combined with the boost from AI demand, has pushed memory chips into an upward cycle.

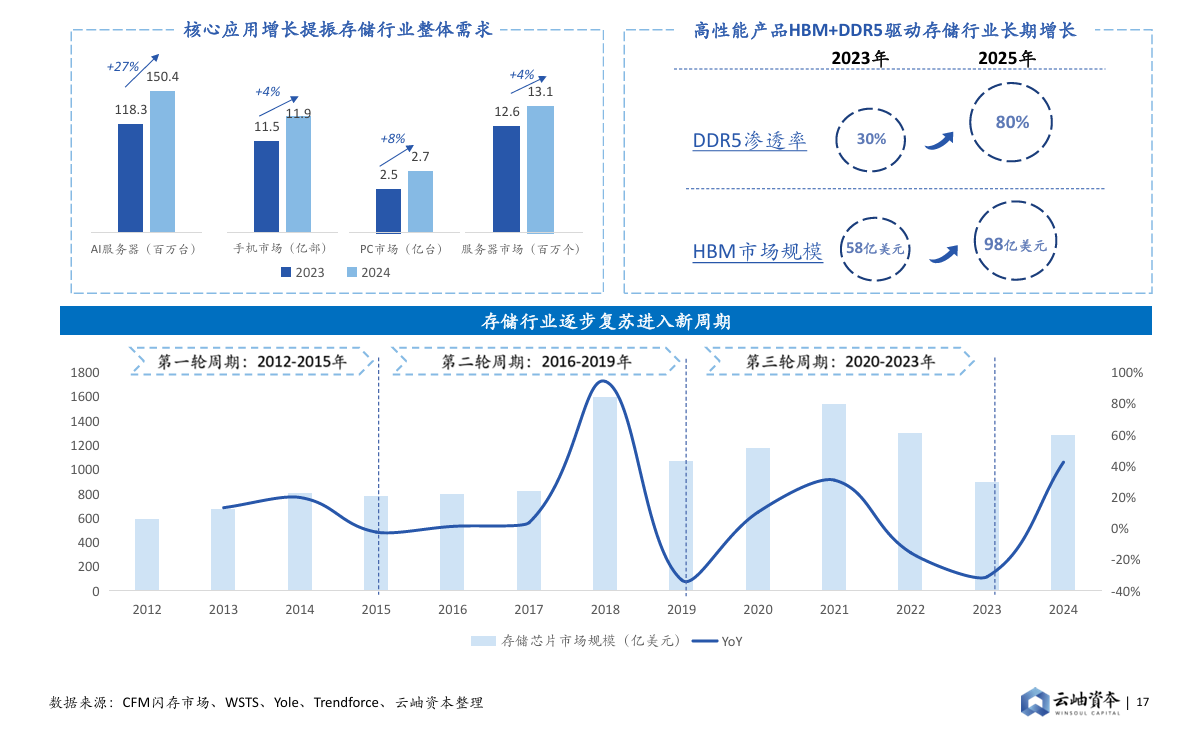

Growth in core applications has lifted overall demand in the storage industry. AI server shipments were about 1.183 million units in 2023 and are expected to grow to 1.504 million in 2024, an annual growth rate of 27%. Mobile phone shipments were 1.15 billion units in 2023 and are expected to reach 1.19 billion in 2024, an annual growth rate of about 4%. PC shipments were 250 million units in 2023 and are expected to reach 270 million in 2024. The overall server market is expected to grow from 12.6 million units in 2023 to 13.1 million in 2024.
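The growth rates that the paragraph leaves unstated can be worked out from the shipment figures it quotes. The sketch below is only an arithmetic check; the function name `yoy_growth` and the dictionary layout are illustrative choices, not part of the original analysis.

```python
def yoy_growth(previous: float, current: float) -> float:
    """Year-over-year growth as a fraction, e.g. 0.08 for 8%."""
    return (current - previous) / previous

# Shipment figures quoted above, in millions of units: (2023, 2024).
shipments = {
    "mobile phones": (1150.0, 1190.0),
    "PCs": (250.0, 270.0),
    "servers": (12.6, 13.1),
}

for segment, (y2023, y2024) in shipments.items():
    print(f"{segment}: {yoy_growth(y2023, y2024):.1%}")
```

On these figures, PCs grow about 8% and servers about 4%, in line with the roughly 4% quoted for mobile phones.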

In addition, high-performance HBM and DDR5 products are driving long-term growth in the storage industry. According to Winsoul Capital, DDR5 penetration was 30% in 2023 and is expected to reach 80% by 2025. The HBM market was worth $5.8 billion in 2023 and is expected to grow to $9.8 billion by 2025. Memory chips have broad prospects in AI: as AI technology matures and spreads, they will be widely used in smart devices, cloud computing, big data centers, and other fields to support AI applications.
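To put the HBM forecast in annual terms, the two market-size figures can be converted into a compound annual growth rate (CAGR). This is a minimal sketch of that arithmetic, assuming the $5.8 billion and $9.8 billion figures refer to 2023 and 2025; the helper name `cagr` is illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction."""
    return (end_value / start_value) ** (1 / years) - 1

# HBM market size in billions of US dollars: 2023 -> 2025 (two years).
hbm_cagr = cagr(5.8, 9.8, 2)
print(f"HBM market CAGR 2023-2025: {hbm_cagr:.1%}")
```

The result is roughly 30% per year, which is well above the single-digit growth of the phone and server markets.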

Figure: Industry recovery combined with AI demand has pushed memory chips into an upward cycle

The core bottleneck of GPU performance is storage: memory capacity and bandwidth need a breakthrough

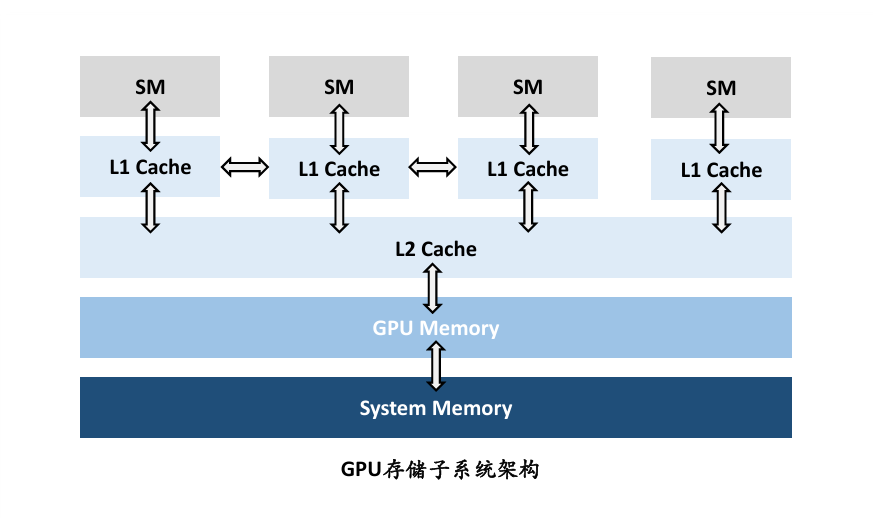

As models keep growing, processing and accessing massive amounts of data places higher demands on both the GPU's computing units and its storage subsystem. On the compute side, stacking more computing units is no longer the problem: the number of units and the performance density keep rising as manufacturing processes improve. However, driven by AI, machine learning, and big data, data volumes have grown exponentially, and storage technology must improve as well to keep data processing fast and efficient. GPUs rely on high-bandwidth memory for high-speed data exchange. They access memory more frequently than CPUs, and their access patterns are highly parallel, so the storage system must deliver extremely high bandwidth at very low latency. When storage becomes the bottleneck, GPUs cannot be fully utilized: large amounts of computing resources sit idle, which lowers system efficiency and increases computing time and energy consumption. At present, most of the time in large-scale model training and inference is spent waiting for data to reach the computing resources, so supplying data efficiently to the computing units through the storage subsystem has become the main direction for improvement.
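One common way to illustrate this kind of bottleneck is the roofline model, which is not part of the original analysis: attainable throughput is the smaller of peak compute and memory bandwidth multiplied by arithmetic intensity (FLOPs performed per byte fetched). The sketch below uses made-up hardware numbers that are assumptions for illustration only, not the specifications of any particular GPU.

```python
def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float,
                      flop_per_byte: float) -> float:
    """Roofline bound: performance is capped by compute or by memory traffic."""
    return min(peak_tflops, bandwidth_tb_s * flop_per_byte)

# Illustrative values only (assumptions, not a real product's specifications).
PEAK_TFLOPS = 1000.0    # peak compute throughput, TFLOP/s
BANDWIDTH_TB_S = 3.0    # memory bandwidth, TB/s

for intensity in (2.0, 50.0, 500.0):  # FLOPs performed per byte fetched
    perf = attainable_tflops(PEAK_TFLOPS, BANDWIDTH_TB_S, intensity)
    share = perf / PEAK_TFLOPS
    print(f"{intensity:6.1f} FLOP/byte -> {perf:7.1f} TFLOP/s ({share:.1%} of peak)")
```

Under these assumed numbers, a workload at 2 FLOP/byte reaches only 6 TFLOP/s, under 1% of peak, and the computing units saturate only above about 333 FLOP/byte. Raising memory bandwidth lifts the memory-bound part of the curve directly, which is why the storage subsystem is the focus of improvement.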

According to Winsoul Capital, the closer a storage tier sits to the computing unit, the smaller its capacity, the higher its access bandwidth, and the more efficient its data transfer. There are therefore three directions for improving the storage subsystem: increasing SRAM capacity, increasing DRAM bandwidth, and increasing SSD bandwidth.

Figure: GPU storage subsystem architecture

HBM breaks through the memory capacity and bandwidth bottleneck, and market demand is strong

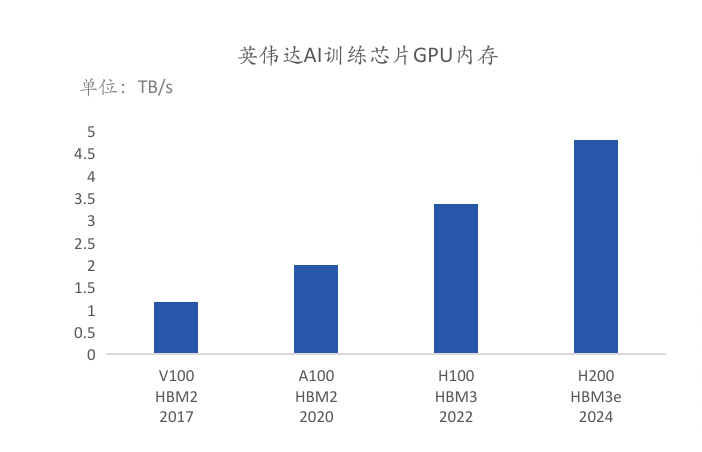

HBM (High Bandwidth Memory) is the highest-bandwidth memory standard currently available. HBM4, with 12 to 16 layers, is expected to launch in 2026, raising bandwidth to more than 1.4 TB/s per stack and capacity to 36-48 GB. HBM technology 3D-stacks DRAM dies on a logic die, which provides higher bandwidth at lower power consumption and makes it an effective solution to the GPU memory bottleneck. According to Winsoul Capital, HBM offers high bandwidth, high capacity, low latency, and low power consumption, and has gradually become the standard configuration for GPUs in AI servers. NVIDIA's AI training chips, the A100, H100, and H200, all use HBM memory.
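The per-stack bandwidth figure follows from a simple relation: interface width in bits multiplied by the per-pin data rate, divided by 8 to convert bits to bytes. The sketch below applies it under stated assumptions that do not come from the article: a 1024-bit interface at 6.4 Gb/s per pin, as in the JEDEC HBM3 specification, and a 2048-bit interface for HBM4. The function names are illustrative.

```python
def stack_bandwidth_gb_s(interface_bits: int, pin_rate_gbit_s: float) -> float:
    """Peak bandwidth of one stack in GB/s: width (bits) x pin rate (Gb/s) / 8."""
    return interface_bits * pin_rate_gbit_s / 8

def required_pin_rate_gbit_s(target_gb_s: float, interface_bits: int) -> float:
    """Per-pin data rate (Gb/s) needed to reach a target stack bandwidth."""
    return target_gb_s * 8 / interface_bits

# Assumption: HBM3-class stack, 1024-bit interface at 6.4 Gb/s per pin.
print(f"HBM3-class stack: {stack_bandwidth_gb_s(1024, 6.4):.1f} GB/s")

# Assumption: HBM4 uses a 2048-bit interface; 1.4 TB/s is taken as 1400 GB/s.
rate = required_pin_rate_gbit_s(1400.0, 2048)
print(f"Pin rate needed for 1.4 TB/s on 2048 bits: {rate:.2f} Gb/s")
```

Under these assumptions, doubling the interface width lets a stack exceed 1.4 TB/s at a per-pin rate of about 5.5 Gb/s, below the 6.4 Gb/s assumed for the narrower interface.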

Figure: With HBM, GPU memory bandwidth has improved greatly

3D Flash creates opportunities for domestic manufacturers to accelerate their rise

At present, the global memory chip market is dominated by overseas companies, yet China is the world's most important consumer market for memory chips. Domestic memory chip manufacturers therefore have broad room to grow in 3D Flash. Represented by YMTC, they have made significant technological breakthroughs in 3D NAND Flash. For example, YMTC has mass-produced 232-layer 3D NAND Flash, the most advanced in the world, marking the entry of domestic NAND Flash technology into the international leading ranks. Domestic manufacturers have also steadily expanded 3D Flash production capacity by increasing investment and optimizing production processes. YMTC, for example, plans to invest a total of US$24 billion and is expected to reach a production capacity of hundreds of thousands of wafers per month, with an annual output value of 100 billion yuan.

NAND Flash has long relied on imports, and demand for localization is strong. The Chinese market accounts for 31% of global NAND Flash demand, while the localization rate is below 1%. The state has introduced policies such as investment tax reductions to encourage the development of domestic integrated circuits, in order to accelerate independent chip production and reduce dependence on foreign suppliers. While expanding in the domestic market, domestic manufacturers are also actively seeking breakthroughs internationally. For example, Huawei HiSilicon announced that it will no longer supply only Huawei but will sell to manufacturers around the world, bringing unprecedented development opportunities to the domestic memory chip market.
