In parts 1, 2, and 3 of the 2024 AI Panorama Report, we covered the breakthroughs of large language models in 2024, the development of domestic large AI models, and the challenges large language models currently face. In this part, we turn to the development of the AI industry in 2024.

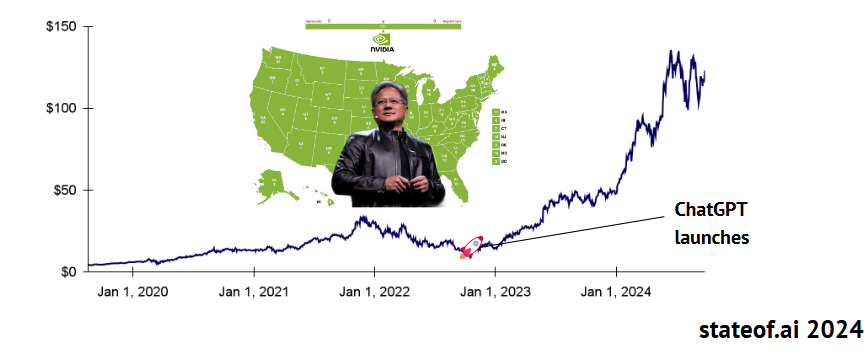

In the first half of this year, Nvidia became the most valuable company in the world. According to the State of AI report, Nvidia's ambitions go far beyond that. As demand for hardware has risen dramatically, especially to support the next generation of AI workloads, nearly all of the world's major labs have come to rely on Nvidia's hardware. In June 2024, Nvidia's market capitalization exceeded $3 trillion, making it the third U.S. company to reach this milestone after Microsoft and Apple. Driven by a sharp increase in second-quarter earnings, Nvidia looks set to hold its strong market position.

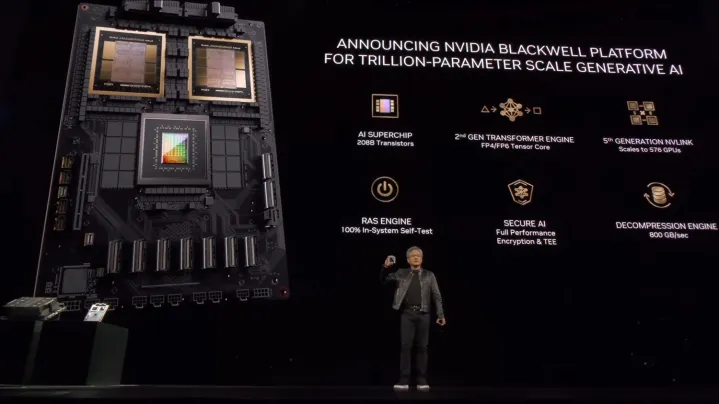

Nvidia's Blackwell GPU series is showing strong pre-sales, and the technology behind it is also in the spotlight. The new Blackwell B200 GPU and GB200 Superchip are expected to deliver significant performance improvements over the previous Hopper-architecture H100. Nvidia says the new products can cut cost and energy consumption by up to 25 times compared with the H100.

Although the launch of the Blackwell architecture has been delayed by manufacturing issues, Nvidia remains confident that it will bring in billions of dollars in revenue by the end of the year. Nvidia founder and CEO Jensen Huang has further expanded the company's reach by presenting a vision for sovereign AI. He believes that every country should establish its own LLM program to protect its technological sovereignty, and in his view Nvidia's hardware is the obvious choice for doing so.

At the same time, established competitors such as AMD and Intel have still failed to close the gap with Nvidia. AMD is heavily promoting ROCm (its CUDA competitor) and increasing its support for the open-source community. However, it has so far failed to offer a strong alternative to Nvidia's robust networking solutions. Intel, for its part, is facing declining hardware sales. Barring regulatory intervention, a major shift in research paradigms, or supply constraints, Nvidia's position in the industry is virtually unassailable.

Pictured: In June 2024, Nvidia became the World's Most Valuable Company

According to the State of AI report, $6 billion has been invested in AI chip challengers since 2016. The report asks what would have happened had those investors put the same money into Nvidia stock instead: that $6 billion would now be worth $120 billion (a 20-fold increase), while the stakes in the competing startups are worth only $31 billion (roughly a five-fold increase). In other words, investing in Nvidia would have returned far more than investing in its startup competitors.

However, not all analysts are optimistic that Nvidia's stock price will keep rising. Some argue that GPU scarcity is fading, that only a handful of companies currently generate consistent revenue from AI-first products, and that even big tech companies' infrastructure build-out may not be large enough to justify Nvidia's current high valuation. Still, the market seems to be ignoring these voices for now, with more investors inclined to share the view of early Tesla investor James Anderson that Nvidia could be worth "double-digit trillions" of dollars within the next decade.

On the compute side, the number of large Nvidia A100 GPU clusters has stayed flat as the industry channels more of its spending into H100 and Blackwell systems. The growth of truly large-scale GPU clusters is concentrated on the H100. Meta has the largest H100 fleet, with 350,000 GPUs, followed by xAI with a 100,000-GPU cluster and Tesla with 35,000. In addition, Lambda, Oracle, and Google are building clusters totaling more than 72,000 H100s. Companies such as Poolside, Hugging Face, DeepL, Recursion, Photoroom, and Magic have together built up more than 20,000 H100s of capacity. The first GB200 clusters are also coming online: the Swiss National Supercomputing Center is already deploying 10,752 GB200s, and OpenAI expects to deploy 300,000 by the end of next year.

Figure: Nvidia Blackwell Platform (Image: State of AI)

In addition, Nvidia remains the top choice of hardware in AI research papers. Last year, Nvidia chips were cited in 19 times more AI research papers than those of all its peers combined. This year the lead has narrowed, but it is still around 11 times. This is partly due to a 522% increase in TPU usage, which has narrowed the TPU's gap with Nvidia to 34 times. At the same time, usage of Huawei's Ascend 910 chip increased by 353%, usage of chips from the large AI chip startups increased by 61%, and research using Apple chips also increased.

Usage of Nvidia's A100 (+59% year-over-year), H100 (+477%), and 4090 (+262%) continues to grow. The V100 (-20%), now 7 years old, is still used half as often as the A100, which further demonstrates the long-term value of Nvidia products in AI research.

Among AI chip startups, Cerebras appears to be leading the way, with its wafer-scale systems driving a 106% increase in the number of AI research papers that use its hardware. Groq's recently launched LPUs are also starting to appear in AI research papers. Meanwhile, Graphcore was acquired by SoftBank in mid-2024. Most of these AI chip startups have shifted from selling systems to offering inference interfaces built on open models, seeking new openings in the competition with Nvidia.
